Customizing SwiftUI List Selection

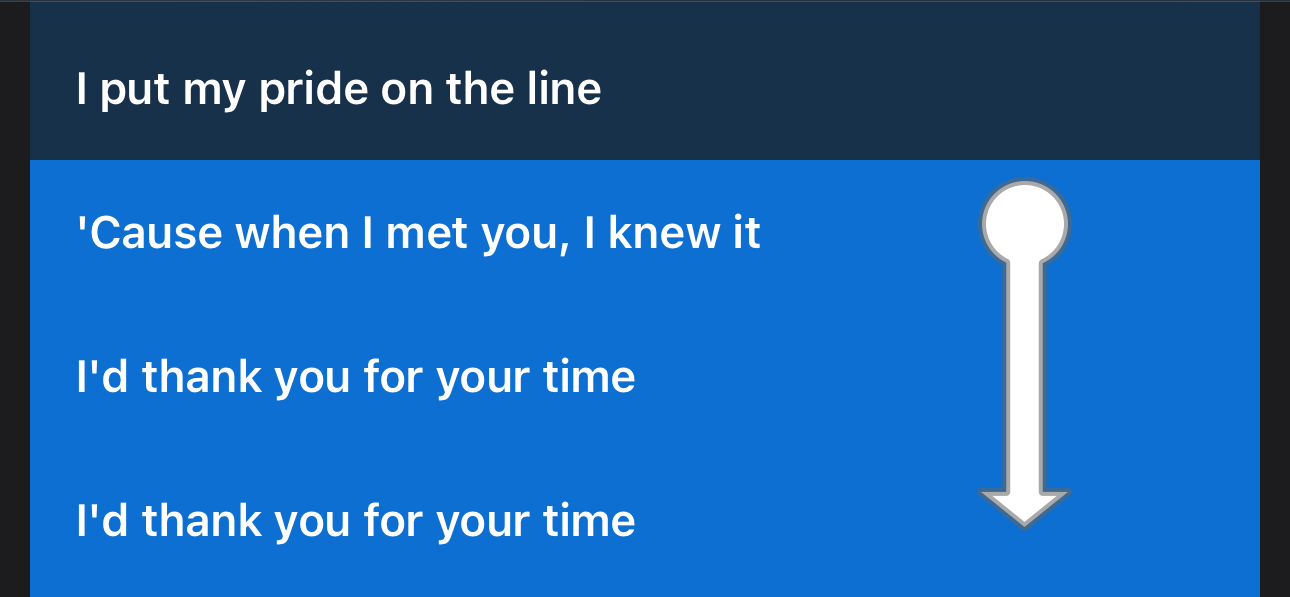

Recently, while working on my new app Lyrigraphy, I wanted to customize the behavior of list selection using gestures. The list previously used a default SwiftUI List: when the user tapped a cell, it would highlight the cell and add its index to the selected items array. This was simple and worked well, but I wanted to add some custom behavior on top of it.

I wanted the ability to tap and drag across cells to select multiple items in the list at once. After all, tapping cells one by one gets tedious if you want to select a large number of them. On its face this seemed like a simple ask, since it is a fairly common UI pattern. However, it ended up requiring a fair amount of effort and custom logic to accomplish in SwiftUI. This is typical of SwiftUI: the default controls are very simple and easy to use, but extensive customization can be painful.

While I’m not advertising this as the best way to implement a list by any means, this post may be useful if you’re looking to accomplish something similar in SwiftUI. Surprisingly, I ended up with something that I’m satisfied with.

If you’re just interested in the source code you can check it out here. Be warned it is a bit messy, but it is self-contained and you can compile and play around with it. Or, you can check out Lyrigraphy on the App Store to try the finished product in the lyrics screen!

System view

The default list implementation does have a multi-selection mode similar to what I’m describing. However, it doesn’t quite meet what I was looking for visually or functionally. First, the list must be in an edit mode which changes its appearance. Second, the draggable area is only on the left side of the list. While technically this could have worked, it wasn’t quite the experience I wanted for this important view in my app. So I decided to delve further into this challenge.

Approach

Since I would essentially need to implement this multi-row selection from scratch, let’s break down what the tap and drag gesture actually entails.

- First a hold gesture needs to be made (~0.5s duration)

- Until the user releases, a drag gesture is recognized

- As the user drags across the screen, we need to update the pending selection of items and color them accordingly on screen using the coordinates of the drag

- If the user is reaching near the top or bottom of the screen, start auto-scrolling the list up or down. There should be a timeout so that it doesn’t repeatedly jump the list.

- When the user releases, we should add (or remove) items from the selection list and update the visual state accordingly.

As you can see, there are actually a lot of requirements under the hood for this gesture. Without a system-provided view, we will be doing much of the heavy lifting ourselves, including boundary math with coordinates.

Implementation

These are the APIs that ended up being essential for my implementation:

- LongPressGesture sequenced before DragGesture for gesture recognition

- highPriorityGesture for applying the gesture

- CoordinateSpace and GeometryProxy to retrieve coordinates within the scroll view

- PreferenceKey with GeometryReader to pass coordinate data back up to the containing view

- ScrollViewReader to control scroll state

It’s worth noting that you should not use a SwiftUI List view for this implementation. Instead use your own ScrollView with a contained ForEach block. A list comes with some nice styling defaults, but it really interferes with a lot of the custom handling required. As one example, it places padding in geometry space between each cell. You can achieve similar list styling with your own modifiers.

PreferenceKeys are at the core of the logic, allowing us to easily reference the exact coordinates of each cell. This allows live updates of the selected items as the gesture is being updated. Setting a custom CoordinateSpace ensures that the coordinates we reference are within the entire scroll view’s context, rather than what is currently on screen. This is important because we autoscroll when reaching the top or bottom of the screen, so the on-screen coordinates of items are not consistent.

We define a struct containing all the fields we need for each cell. We also store the global coordinates because we need to determine if the gesture is currently near the top or bottom of the screen.

    struct LinePreferenceData: Equatable {
        let index: Int
        let minY: Double
        let maxY: Double
        let globalMinY: Double
        let globalMaxY: Double

        init(index: Int, bounds: CGRect, globalBounds: CGRect) {
            self.index = index
            self.minY = bounds.minY
            self.maxY = bounds.maxY
            self.globalMinY = globalBounds.minY
            self.globalMaxY = globalBounds.maxY
        }
    }

The preference key simply wraps this in the outer view to create an array that we can reference. We aren’t guaranteed that this is in order, so we always reference the index of the LinePreferenceData for reconciliation.

    struct LinePreferenceKey: PreferenceKey {
        typealias Value = [LinePreferenceData]

        static var defaultValue: [LinePreferenceData] = []

        static func reduce(value: inout [LinePreferenceData], nextValue: () -> [LinePreferenceData]) {
            value.append(contentsOf: nextValue())
        }
    }

Then in each cell in our ForEach, we place a clear background that sets the PreferenceKey’s values. This is a clever hack, capturing the entire geometry space of the cell without affecting its appearance in the UI.

    .background() {
        GeometryReader { geometry in
            Rectangle()
                .fill(Color.clear)
                .preference(key: LinePreferenceKey.self,
                            value: [LinePreferenceData(index: lyric.id,
                                                       bounds: geometry.frame(in: .named("container")),
                                                       globalBounds: geometry.frame(in: .global))])
        }
    }

It is critical that the .coordinateSpace modifier is applied to the appropriate element in your outer view. It must be set on the VStack inside the ScrollView. If it is placed on the wrong element, the geometries will be incorrect, and the problem is very difficult to debug. I ended up spending several hours on this, and the solution was moving the coordinateSpace designation up just one line.
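To make the placement concrete, here is a minimal sketch of the outer view structure. The view name `ContainerSketch`, the `rows` property, and the row styling are placeholders I made up for illustration and are not the actual Lyrigraphy code; only the position of `.coordinateSpace(name:)` relative to the ScrollView and VStack is the point.

```swift
import SwiftUI

// Hypothetical outer view: names and styling are placeholders for illustration.
struct ContainerSketch: View {
    let rows: [String]

    var body: some View {
        ScrollView {
            VStack(spacing: 0) {
                ForEach(rows.indices, id: \.self) { index in
                    Text(rows[index])
                        .frame(maxWidth: .infinity, alignment: .leading)
                        .padding()
                    // The clear GeometryReader background that publishes
                    // each row's frame would be attached to the row here.
                }
            }
            // The named space sits on the VStack *inside* the ScrollView,
            // so row frames are measured relative to the scrolling content
            // rather than to the visible viewport.
            .coordinateSpace(name: "container")
        }
    }
}
```

With the modifier on the VStack, a row's `frame(in: .named("container"))` should stay the same as the list scrolls, which is what the drag math relies on.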

To bring it all together, we need to configure the gesture. Most of this is straightforward, though we do have some business logic to find where the drag started to determine if we are selecting or unselecting with this drag.

    var drag: some Gesture {
        LongPressGesture(minimumDuration: 0.5)
            .sequenced(before: DragGesture(minimumDistance: 0, coordinateSpace: .named("container")))
            .updating($isDragging, body: { value, state, transaction in
                switch value {
                case .first(true):
                    break
                case .second(_, let drag):
                    guard let start = drag?.startLocation else { return }
                    let end = drag?.location ?? start
                    if isDraggingSelected == nil {
                        let dragStartIndex = getStartIndex(from: start.y)
                        isDraggingSelected = dragStartIndex.map { viewModel.selectedLyricIndexes.contains($0) } ?? false
                    }
                    handleDragChange(start: start, end: end)
                default:
                    return
                }
            })
            .onEnded { value in
                switch value {
                case .first(true):
                    // Long press succeeded
                    isSelecting = true
                case .second(true, _):
                    // Drag ended
                    updateSelectionRange()
                    isSelecting = false
                    isDraggingSelected = nil
                default:
                    break
                }
            }
    }
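The gesture above calls a `getStartIndex(from:)` helper that isn't shown in this post. As a rough sketch of what such a lookup could look like, assuming the collected line data is available as an array: the `LineSpan` type and the free function `startIndex(from:in:)` below are hypothetical stand-ins, not the code from the app.

```swift
// Hypothetical stand-in for LinePreferenceData, reduced to the fields used here.
struct LineSpan {
    let index: Int
    let minY: Double
    let maxY: Double
}

/// Returns the index of the first line whose vertical span contains `y`,
/// or nil when `y` falls outside every line.
func startIndex(from y: Double, in lines: [LineSpan]) -> Int? {
    lines.first { $0.minY <= y && y <= $0.maxY }?.index
}

let lines = [
    LineSpan(index: 0, minY: 0, maxY: 40),
    LineSpan(index: 1, minY: 40, maxY: 80),
]
let selected: Set<Int> = [1]

// Mirrors the isDraggingSelected decision in the gesture:
// a drag that starts on an already-selected line is treated as a deselect.
let startsOnSelected = startIndex(from: 55, in: lines).map { selected.contains($0) } ?? false
```

Here y = 55 lands on line 1, which is already selected, so the drag would be treated as a deselect. A drag that starts outside every line falls back to `false`, meaning it selects.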

And finally, implement the method to handle update events to the gesture.

    private func handleDragChange(start: CGPoint, end: CGPoint) {
        let minY = min(start.y, end.y)
        let maxY = max(start.y, end.y)

        // Clear the pending selection before recalculating
        pendingSelection.removeAll()

        // Find all lines that intersect with the drag range
        let selectedLines = lineData.filter { line in
            return !(line.maxY < minY || line.minY > maxY)
        }

        let currentLine = lineData.first { line in
            return end.y >= line.minY && end.y <= line.maxY
        }

        pendingSelection = Set(selectedLines.map { $0.index })

        if let currentLine {
            handleAutoScroll(currentY: currentLine.globalMaxY, index: currentLine.index)
        }
    }
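To see the overlap check and the release step working together, here is a small standalone sketch. `LineSpan`, `overlappingIndexes`, and `commit` are hypothetical names for illustration; in particular, `commit` is only my guess at the kind of logic `updateSelectionRange()` performs, assuming the selection is stored as a `Set<Int>`.

```swift
// Hypothetical stand-in for LinePreferenceData, reduced to the fields used here.
struct LineSpan {
    let index: Int
    let minY: Double
    let maxY: Double
}

/// Indexes of every line whose span overlaps the closed range [lower, upper].
/// Equivalent to the negated "entirely above or entirely below" test.
func overlappingIndexes(_ lines: [LineSpan], lower: Double, upper: Double) -> Set<Int> {
    Set(lines.filter { $0.maxY >= lower && $0.minY <= upper }.map { $0.index })
}

/// Applies a pending drag selection to the committed selection.
/// `deselecting` is true when the drag started on an already-selected line.
func commit(pending: Set<Int>, to selection: Set<Int>, deselecting: Bool) -> Set<Int> {
    deselecting ? selection.subtracting(pending) : selection.union(pending)
}

// Four rows, each 40 points tall.
let lines = (0..<4).map { LineSpan(index: $0, minY: Double($0) * 40, maxY: Double($0 + 1) * 40) }

// An upward drag from y = 100 to y = 50 covers rows 1 and 2.
let pending = overlappingIndexes(lines, lower: 50, upper: 100)

let committed: Set<Int> = [2, 3]
let afterSelect = commit(pending: pending, to: committed, deselecting: false)
let afterDeselect = commit(pending: pending, to: committed, deselecting: true)
```

Because the range is normalized to lower and upper bounds first, dragging upward and dragging downward produce the same pending set.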

We use the ScrollViewProxy to trigger scrolling up or down. ScrollViewProxy only allows you to target specific elements, so I decided to scroll up or down 3 items from the current position (with bounds checking). We use a boolean state variable with a DispatchQueue asyncAfter call to throttle scrolling so that it doesn’t continually re-trigger.
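The target calculation for auto-scrolling can be sketched as a pure function. Everything here is an assumption for illustration rather than the code from the app: the function name, the parameter names, the 80-point edge margin, and the idea of passing the viewport bounds in explicitly. Only the step of 3 items and the bounds checking come from the description above.

```swift
/// Picks the row to scroll to when the drag nears a screen edge,
/// or nil when no scroll is needed. Names and defaults are hypothetical.
func autoScrollTarget(currentGlobalY: Double,
                      currentIndex: Int,
                      itemCount: Int,
                      viewportMinY: Double,
                      viewportMaxY: Double,
                      edgeMargin: Double = 80,
                      step: Int = 3) -> Int? {
    guard itemCount > 0 else { return nil }

    if currentGlobalY > viewportMaxY - edgeMargin {
        // Near the bottom edge: move `step` rows down, clamped to the last row.
        let target = min(currentIndex + step, itemCount - 1)
        return target == currentIndex ? nil : target
    }
    if currentGlobalY < viewportMinY + edgeMargin {
        // Near the top edge: move `step` rows up, clamped to the first row.
        let target = max(currentIndex - step, 0)
        return target == currentIndex ? nil : target
    }
    // Comfortably inside the viewport: leave the scroll position alone.
    return nil
}

let down = autoScrollTarget(currentGlobalY: 780, currentIndex: 20, itemCount: 50,
                            viewportMinY: 0, viewportMaxY: 800)
let up = autoScrollTarget(currentGlobalY: 30, currentIndex: 1, itemCount: 50,
                          viewportMinY: 0, viewportMaxY: 800)
let middle = autoScrollTarget(currentGlobalY: 400, currentIndex: 20, itemCount: 50,
                              viewportMinY: 0, viewportMaxY: 800)
```

In the view, a non-nil result would be passed to the proxy's `scrollTo(_:anchor:)` using whatever ids the ForEach assigns to rows, and only while the throttling flag allows it.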

There are some other small details not mentioned here, like coloring and gesture state, but this is the gist of the implementation. It is very possible this implementation is not well optimized for a very large number of items, since I was not designing for that use case in my app. The full code is a bit messy, but I figured it would be worth publishing in case it’s useful to anyone as a reference.

Appendix: a note on LLMs

As a side note, I also used LLMs throughout this process and saw where they excel and where they struggle with this kind of iteration. Overall the models are familiar with SwiftUI, but they sometimes require prompting to use the latest features. This is likely because a lot of open source code targets older iOS versions. And of course, with more difficult issues, they will frequently hallucinate their way out of the problem with non-existent APIs, which you need to be careful of.

If you have a high-level understanding of which tools to use, you can improve prompts by including specific APIs or design patterns you are trying to utilize. For example, “Use the @Observable macro instead of extending ObservableObject directly”. Directly linking to specific Apple documentation pages can also be beneficial. However, the models do tend to struggle with more advanced logic regardless. Even newer thinking models like R1 were not able to debug some of the issues I encountered.

With a simple prompt describing my requirements and providing the existing view code, Claude was able to provide a solid high level approach. I started with very simple prompts like “How do I make it so that when you tap (and hold) and drag it allows you select multiple items at a time?”. Of course many, many details were wrong and it required tons of iteration. But with some follow up prompting, I was able to find my building blocks including some APIs I had not heard of before.

My general iteration steps were to:

- Provide simple instructions to the model on my goal with my existing code

- Review output, incorporate relevant functionality into my implementation

- Re-prompt with new issues or bugs with the new implementation

- Manually correct bugs and continue to iterate

A lot of its suggestions were valid at a surface level. But none of the models were very good at thinking through the overall functionality of a complex view, or debugging complex performance and logic issues. All the models (including thinking models) struggled to make any progress with esoteric SwiftUI bugs, or logical boundary math using coordinates.

Once I got to a high level of refinement, I had more luck manually scrutinizing the view and debugging. The models would give me seemingly increasingly random things to try, with high certainty in their incorrect theories. While you can prompt for assistance with high level debugging theories, it was not that useful for resolving detailed technical issues. To be fair, I found this task to be generally challenging as a human as well.

Also, LLM tooling in Xcode is just much slower and more tedious than in other tools. My general workflow was simply copying and pasting text between Xcode and the Anthropic console.